Data Governance in the Media Sector

For one of our clients in the media sector, we are contributing our data governance solutions to the digital transformation of its data architecture in cloud environments.

As part of this initiative, we have integrated several components into the client's cloud platform, providing a complete view of its data from a single point of access.

To achieve this, we designed and implemented a Data Catalog along with metadata discovery mechanisms. Our solutions also let us manage data definitions from a business perspective through a Business Glossary, which is where we measure and control the quality of the information.

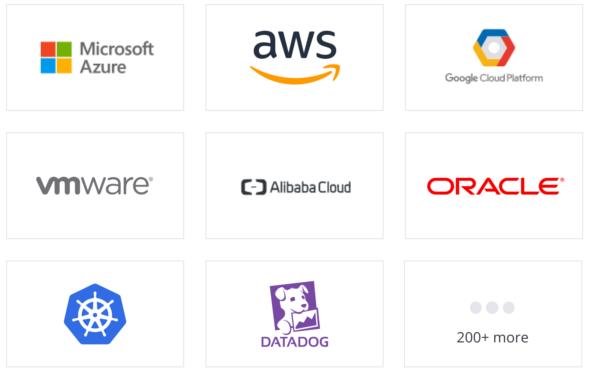

The technologies used most in the project were Azure cloud services such as Azure Data Lake Storage (ADLS), Azure Blob Storage and Azure Data Lake Analytics (ADLA), among others.
